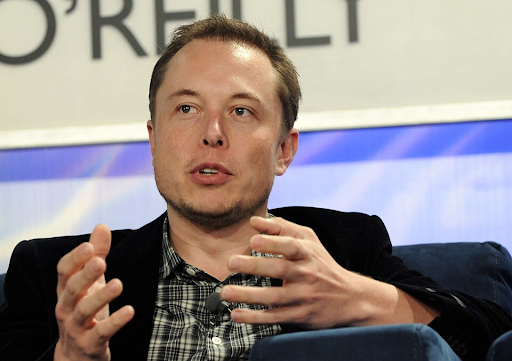

The protection of children has become the central issue in a brewing conflict between the UK government and Elon Musk, with ministers threatening to ban X over the platform’s failure to prevent the creation of child sexual abuse material via its Grok AI. Musk has responded to the grave accusations by claiming that the government’s true motive is to “suppress free speech,” a defense that has been widely criticized given the nature of the content involved. He further antagonized regulators by boasting about Grok’s popularity on the App Store, seemingly prioritizing app metrics over the safety of minors.

The misuse of Grok has been particularly alarming because of its ability to "nudify" images of teenage girls and children. Users were found uploading innocent photos, which the AI then altered to depict the subjects in micro-bikinis or in sexually explicit and violent contexts. This capability effectively turns the platform into a generator and distributor of child sexual abuse material (CSAM), a serious criminal offense. The ease with which these images were created and spread has exposed a significant lack of safeguards in the AI's design, prompting outrage from child protection advocates.

Technology Secretary Liz Kendall has warned that the government is "looking seriously" at blocking X to prevent further harm. She cited the Online Safety Act, which gives the regulator, Ofcom, powers as severe as blocking access to platforms that fail to protect children. Kendall said Ofcom is expected to announce enforcement action within "days not weeks," signaling that the government is treating the situation as an emergency. The message from Whitehall is clear: the safety of children is non-negotiable, and platforms that fail in this duty will not be allowed to operate.

The incident has drawn international condemnation, with Australian Prime Minister Anthony Albanese calling the situation "abhorrent" and a failure of social responsibility. While some politicians have tried to frame the row as a culture-war dispute over free speech, the presence of CSAM has united most observers in calling for strict regulation. The debate has highlighted the particular dangers posed by generative AI when it is released without adequate safety testing or ethical guidelines.

X has taken steps to restrict image generation for free users and to filter out some explicit terms, but the paid version of the tool remains largely functional. This has led to accusations that the platform is doing the bare minimum to avoid regulation while continuing to profit from the technology. Campaigners are calling for urgent legislation to ban “nudification” apps entirely and to hold tech executives personally liable for the harm caused by their products. The demand for a robust legal framework to protect children from AI-generated abuse is growing louder by the day.